There is growing discussion of using predictive algorithms to judge people in the criminal justice system, for example to decide whether and how to grant probation or bail.

But these tools carry serious risks: false positives, and algorithms that are racist or otherwise biased.

What standards will we use to evaluate proposals to use these algorithms? And how do we build these tools so that fewer racist assumptions are baked into them?

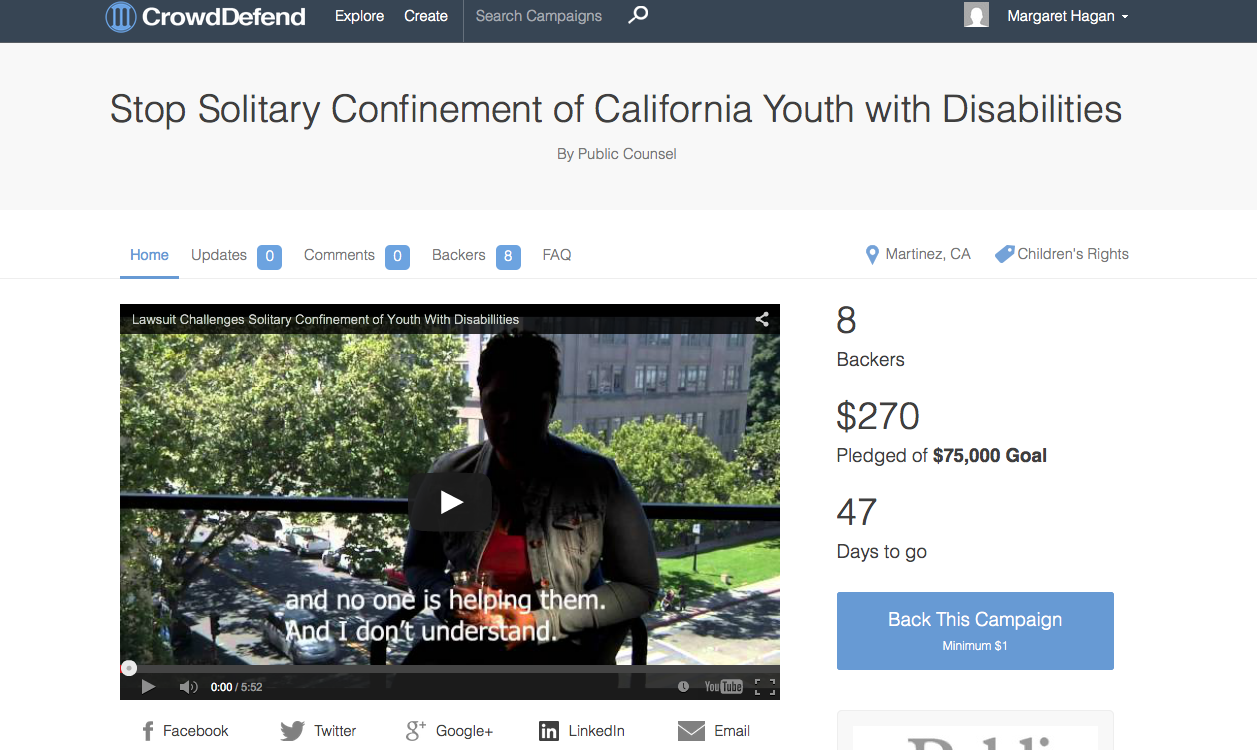

We need these tools to be more transparent and more open to public scrutiny. More members of the public need to understand what is going on, so that a broader social consensus about their use can form.